Result Assessment Tool (RAT)

The project’s overall goal is to further develop and enhance the Result Assessment Tool (RAT) so that it becomes a stable, flexible, and sustainable tool for conducting studies that collect and analyse data from search engines and other information retrieval systems. RAT is a software toolkit that allows researchers to conduct large-scale studies based on results from (commercial) search engines and other information retrieval systems. It consists of modules for (1) designing studies, (2) collecting data from search systems, (3) collecting judgments on the results, and (4) downloading and analysing the results.

Insights

Thanks to RAT’s modular design, individual components can be used for a wide range of studies of web content, such as qualitative content analyses. Automated scraping also allows web documents to be analysed quantitatively in empirical studies.

Sustainability

We will ensure the sustainability of the project results through measures in three areas: (1) establishing and distributing the software, (2) building and maintaining a user and developer community, and (3) publishing the software as open source.

Software

The modular, web-based software automatically collects data from search engines. Researchers can flexibly design studies with questions and scales, and jurors then evaluate the collected information objects by answering these questions.

Result Assessment Tool (RAT)

The starting point for developing RAT was that retrieval effectiveness studies usually require a great deal of manual work: designing the test, collecting search results, recruiting jurors and collecting their assessments, and analysing the test results. RAT addresses all of these tasks and aims to make retrieval studies more efficient and effective.

The design of the RAT prototype has been guided by researchers’ need to query external information retrieval systems in general, and search engines in particular. This need derives from researchers’ interest in the quality of these systems’ search results, the composition of their results (lists or pages), and potential biases in the results, to name but a few. This focus on external systems distinguishes RAT from software built to support retrieval evaluation in settings where researchers have complete control over the systems being evaluated.

RAT allows studies to be conducted under the two major evaluation paradigms, namely system-centered and user-centered evaluations.

RAT is also useful for other researchers who want to use search engine results for further analysis. Although RAT is rooted in information retrieval evaluation, its uses go well beyond this research area. Already in the prototype phase, we found a significant need for a tool like RAT in both the search engine and the information retrieval research communities, and several projects have already demonstrated this.

Use the demo version at http://rat-software.org/home

RAT Community Meeting 2023

The first RAT Community Meeting brought together researchers interested in using search engine data in their research.

Some highlights from the program were:

- Showcases: presentations on how researchers use RAT to study a diverse range of topics, such as climate change, misinformation, and health.

- Tutorials: guidance on using RAT effectively in research, from data collection to in-depth analysis.

- Poster session: examples of how RAT has been used in various research projects and how its functionality has been extended through add-ons.

Showcases and extensions

Welcome

Dirk Lewandowski

University of Duisburg-Essen, Hamburg University of Applied Sciences

The Query Sampler

Nurce Yagci

Hamburg University of Applied Sciences

The SEO Classifier

Sebastian Sünkler

Hamburg University of Applied Sciences

Rammstein Debate

Sebastian Sünkler

Hamburg University of Applied Sciences

Greyzone Medicines

Olof Sundin, Kristofer Söderström

Lund University

Mapping politics of exclusion

Ov Cristian Norocel

Lund University

Political candidates and SEO

Kay Hinz

Agrus Data Insights

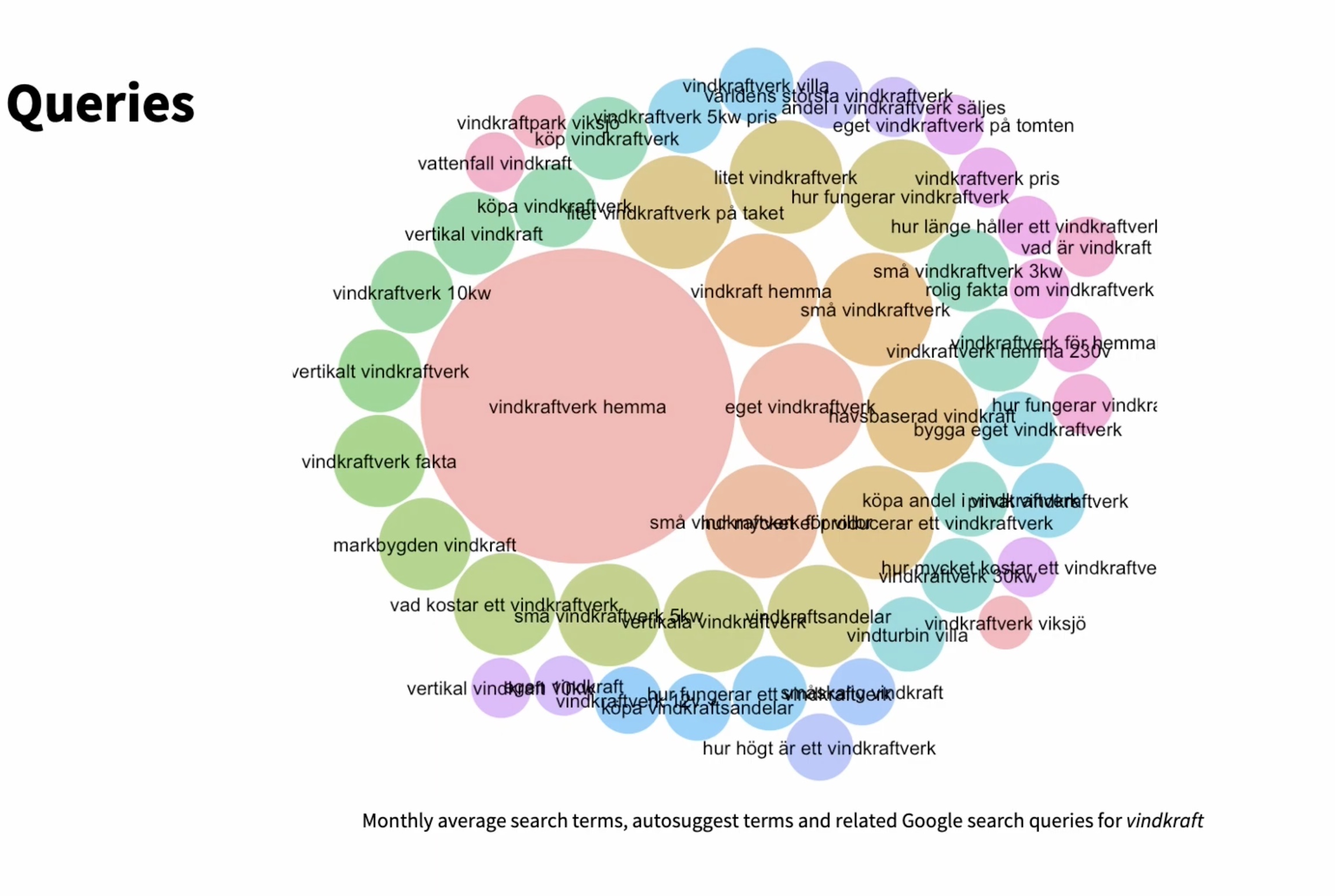

Understanding wind power

Björn Ekström

Swedish School of Library and Information Science, University of Borås

Poster Session

Googling for diseases

Sebastian Schultheiß

Hamburg University of Applied Sciences

EI-Logger Plug-In

Hossam Al Mustafa

University of Duisburg-Essen

Comparison of Climate-Related Results

Tuhina Kumar

University of Duisburg-Essen

Readability of Web Documents

Mohamed Elnaggar

University of Duisburg-Essen

Imprint Crawler

Marius Messer

University of Duisburg-Essen

Insurance offerings on Google

Sebastian Sünkler

Hamburg University of Applied Sciences

Automated web page classification

Nurce Yagci

Hamburg University of Applied Sciences

Sentiment analysis

Nimesh Ghimire

University of Duisburg-Essen

Fact checks and ‘fake news’ in Google results

Kamila Koronska

University of Amsterdam and AFP

Source Distribution

Helena Häußler

Hamburg University of Applied Sciences

- “EI-Logger Plug-In: Explicit and implicit Logger for elicitation of search engine user’s data”, Hossam Al Mustafa, University of Duisburg-Essen

- “Measuring the Readability of Web Documents”, Mohamed Elnaggar, University of Duisburg-Essen

- “Sentiment analysis for search result snippets”, Nimesh Ghimire, University of Duisburg-Essen

- “A Comparison of Source Distribution and Result Overlap in Web Search Engines”, Helena Häußler, Hamburg University of Applied Sciences

- “A forum scraper for the Result Assessment Tool (RAT)”, Paul Kirch, University of Duisburg-Essen

- “The competition between fact checks and ‘fake news’ in Google results”, Kamila Koronska, University of Amsterdam and AFP

- “Comparison of Results Shown by Different Search Engines for Climate-Related Topics”, Tuhina Kumar, University of Duisburg-Essen

- “Imprint crawler – a web crawler specialized in the automatic imprint extraction of websites under consideration of the German Legislation”, Marius Messer, University of Duisburg-Essen

- “Googling for diseases: Exploring source types, SEO measures, and information quality of health-related search results”, Sebastian Schultheiß, Hamburg University of Applied Sciences

- “What does Google recommend when you want to compare insurance offerings? – A study investigating source distribution in Google’s top search results”, Sebastian Sünkler, Hamburg University of Applied Sciences

- “Automated web page classification: A systematic literature review”, Nurce Yagci, Hamburg University of Applied Sciences

Publications

Schultheiß, S.; Lewandowski, D.; von Mach, S.; Yagci, N. (2023). Query sampler: generating query sets for analyzing search engines using keyword research tools. In: PeerJ Computer Science 9(e1421). http://doi.org/10.7717/peerj-cs.1421

Lewandowski, D.; Sünkler, S. (2019). Das Relevance Assessment Tool. Eine modulare Software zur Unterstützung bei der Durchführung vielfältiger Studien mit Suchmaschinen. In: Information – Wissenschaft & Praxis 70(1), 46-56. https://doi.org/10.1515/iwp-2019-0007

Lewandowski, D.; Sünkler, S. (2012). Relevance Assessment Tool: Ein Werkzeug zum Design von Retrievaltests sowie zur weitgehend automatisierten Erfassung, Aufbereitung und Auswertung der Daten. In: Proceedings der 2. DGI-Konferenz: Social Media und Web Science – Das Web als Lebensraum. Frankfurt am Main: DGI, pp. 237-249.

Lewandowski, D.; Sünkler, S. (2013). Designing search engine retrieval effectiveness tests with RAT. In: Information Services & Use 33(1), 53-59. https://doi.org/10.3233/ISU-130691

Presentations

Sünkler, S.; Yagci, N.; Schultheiß, S.; von Mach, S.; Lewandowski, D. (2024). Result Assessment Tool Software to Support Studies Based on Data from Search Engines. In: Lecture Notes in Computer Science. https://link.springer.com/chapter/10.1007/978-3-031-56069-9_19

Sünkler, S.; Yagci, N.; Sygulla, D.; von Mach, S.; Schultheiß, S.; Lewandowski, D. (2023). Result Assessment Tool (RAT): A Software Toolkit for Conducting Studies Based on Search Results. In: Proceedings of the Association for Information Science and Technology. https://doi.org/10.1002/pra2.972

Schultheiß, S.; Sünkler, S.; Yagci, N.; Sygulla, D.; von Mach, S.; Lewandowski, D. (2023). Simplify your Search Engine Research: wie das Result Assessment Tool (RAT) Studien auf der Basis von Suchergebnissen unterstützt. In: Proceedings des 17. Internationalen Symposiums für Informationswissenschaft (ISI 2023), pp. 429-437.

Sünkler, S.; Yagci, N.; Sygulla, D.; von Mach, S.; Schultheiß, S.; Lewandowski, D. (2023). Result Assessment Tool (RAT): Software-Toolkit für die Durchführung von Studien auf der Grundlage von Suchergebnissen. In: Proceedings des 17. Internationalen Symposiums für Informationswissenschaft (ISI 2023), pp. 438-444.

Sünkler, S.; Yagci, N.; Sygulla, D.; von Mach, S.; Schultheiß, S.; Lewandowski, D. (2022). Result Assessment Tool (RAT). In: Informationswissenschaft im Wandel. Wissenschaftliche Tagung 2022 (IWWT22), Düsseldorf.

Research that used RAT to collect and analyse data (selected)

Ekström, B.; Tattersall Wallin, E. (2023). Simple questions for complex matters?: An enquiry into Swedish Google search queries on wind power. In: Nordic Journal of Library and Information Studies 4(1), 34-50. https://doi.org/10.7146/njlis.v4i1.136246

Norocel, O. C.; Lewandowski, D. (2023). Google, data voids, and the dynamics of the politics of exclusion. In: Big Data & Society. https://doi.org/10.1177/205395172211490

Haider, J.; Ekström, B.; Tattersall Wallin, E.; Gunnarsson Lorentzen, D.; Rödl, M.; Söderberg, N. (2023). Tracing online information about wind power in Sweden: An exploratory quantitative study of broader trends. https://www.diva-portal.org/smash/get/diva2:1740876/FULLTEXT01.pdf

Sünkler, S.; Lewandowski, D. (2017). Does it matter which search engine is used? A user study using post-task relevance judgments. In: Proceedings of the 80th Annual Meeting of the Association for Information Science and Technology, Crystal City, VA, USA. https://doi.org/10.1002/pra2.2017.14505401044

Schaer, P.; Mayr, P.; Sünkler, S.; Lewandowski, D. (2016). How Relevant is the Long Tail? A Relevance Assessment Study on Million Short. In: Fuhr, N. et al. (eds.): Experimental IR Meets Multilinguality, Multimodality, and Interaction (Lecture Notes in Computer Science, Vol. 9822), pp. 227-233. https://doi.org/10.1007/978-3-319-44564-9_20

Behnert, C. (2015). LibRank: New Approaches for Relevance Ranking in Library Information Systems. In: Pehar, F.; Schlögl, C.; Wolff, C. (eds.): Re:inventing Information Science in the Networked Society. Proceedings of the 14th International Symposium on Information Science (ISI 2015). Glückstadt: Verlag Werner Hülsbusch, pp. 570-572.

Lewandowski, D. (2015). Evaluating the retrieval effectiveness of web search engines using a representative query sample. In: Journal of the Association for Information Science and Technology (JASIST) 66(9), 1763-1775. https://doi.org/10.1002/asi.23304

Lewandowski, D. (2013). Verwendung von Skalenbewertungen in der Evaluierung von Web-Suchmaschinen. In: Hobohm, H.-C. (ed.): Informationswissenschaft zwischen virtueller Infrastruktur und materiellen Lebenswelten. Proceedings des 13. Internationalen Symposiums für Informationswissenschaft (ISI 2013). Boizenburg: Verlag Werner Hülsbusch, pp. 339-348.

Working papers

Sünkler, S.; Yagci, N. (2023). Technical documentation of the Result Assessment Tool. Working paper. https://doi.org/10.17605/OSF.IO/3Z4DF

Schultheiß, S.; Lewandowski, D. (2023). Evaluating the usability of the Result Assessment Tool (RAT) using User-centered design (UCD) principles. Working paper. https://doi.org/10.17605/OSF.IO/V4QT9

Student work

2022. Student project on biases in library catalogues. [Research project]

Günther, Markus, 2016. Welches Weltbild vermitteln Suchmaschinen? Untersuchung der Gewichtung inhaltlicher Aspekte von Google- und Bing-Ergebnissen in Deutschland und den USA zu aktuellen internationalen Themen. [Master's thesis]

Stempel, Anne, 2016. Suchverhalten in Internet Suchmaschinen – Analyse der Unterschiede in den Suchanfragen von Senioren im Vergleich zu Teenagern. [Bachelor's thesis]

Podgajnik, Lea, 2013. Relevanzanalyse niedrig gerankter Google-Suchergebnisse auf informationsorientierte Suchanfragen mit dem Relevance Assessment Tool. [Bachelor's thesis]

Gather, Alexandra, 2013. Suchmaschinen und Sprache: eine Studie über den Umgang von Google und BING mit den Besonderheiten der deutschen Sprache. [Master's thesis]

Günther, Markus, 2012. Evaluierung von Suchmaschinen: Qualitätsvergleich von Google- und Bing-Suchergebnissen unter besonderer Berücksichtigung von Universal-Search-Resultaten. [Bachelor's thesis]

Sünkler, Sebastian, 2012. Prototypische Entwicklung einer Software für die Erfassung und Analyse explorativer Suchen in Verbindung mit Tests zur Retrievaleffektivität. [Master's thesis]

Project team

Sebastian Sünkler

Dirk Lewandowski

Tuhina Kumar

Sebastian Schultheiß

Nurce Yagci

Daniela Sygulla

Sonja von Mach

Funded by the German Research Foundation (DFG – Deutsche Forschungsgemeinschaft):

Funding period: 08/2021 - 07/2024, grant number 460676551.